Ren W, Ma L, Zhang J, et al. Gated fusion network for single image dehazing[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 3253-3261.

1. Overview

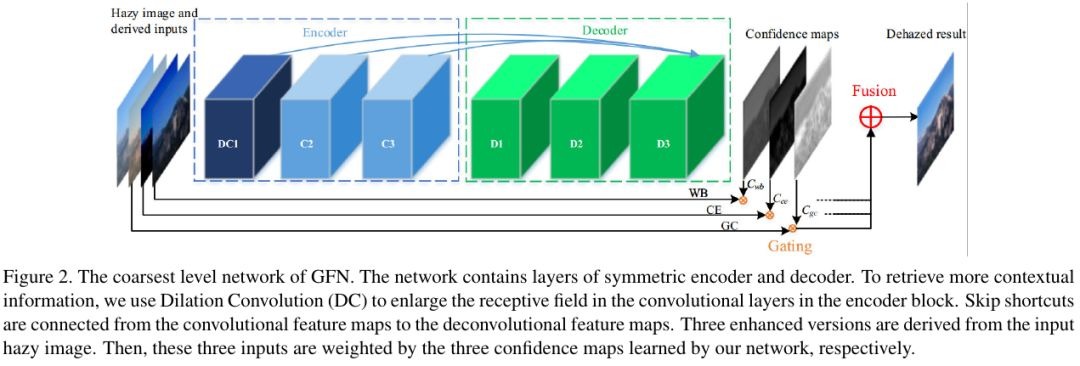

1.1. Motivation

Most existing methods follow the atmospheric scattering model.
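For reference, the scattering model mentioned above is usually written as follows, where $I$ is the observed hazy image, $J$ the clear scene, $A$ the global atmospheric light, $t$ the transmission, $\beta$ the scattering coefficient, and $d$ the scene depth. The symbols $A$ and $\beta$ match those used in the dataset section of these notes; the rest is standard notation rather than something taken from the notes.

```latex
I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)}
```

Methods that follow this model estimate $t$ and $A$ and then invert the equation to obtain $J$.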

This paper proposes the Gated Fusion Network (GFN):

- directly restore a clear image from a hazy image

- encoder-decoder

- fusion-based strategy with confidence maps over three derived inputs: White Balance (WB), Contrast Enhancement (CE), and Gamma Correction (GC)

- multi-scale to avoid halo artifacts

- GAN loss
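The fusion-based strategy with confidence maps can be read as a per-pixel weighted sum of the derived inputs. The following is a minimal NumPy sketch of that blending step only, not the paper's network: the function name `gated_fusion` and the array shapes are assumptions made for illustration, and in GFN the confidence maps would come from the encoder-decoder rather than being supplied by hand.

```python
import numpy as np

def gated_fusion(inputs, confidences):
    """Blend derived inputs with per-pixel confidence maps.

    inputs:      list of H x W x 3 float arrays (e.g. WB, CE, GC images)
    confidences: list of H x W x 1 float arrays, one map per input
    """
    fused = np.zeros_like(inputs[0], dtype=np.float64)
    for image, conf in zip(inputs, confidences):
        # Each confidence map broadcasts over the three colour channels.
        fused += conf * image
    return fused
```

A pixel where one confidence map dominates takes its colour mostly from the corresponding derived input.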

1.2. Major Factors of Hazy Image

- color cast introduced by atmospheric light

- lack of visibility due to attenuation

- solution: derive three inputs from the hazy image

  - WB: eliminates chromatic casts

  - CE: better global visibility in thick-haze regions, but too dark in light-haze regions

  - GC: recovers the light-haze regions

1.3. Contribution

- the network does not rely on the atmospheric scattering model

- demonstrate the utility and effectiveness of GFN

- multi-scale approach to eliminate halo artifacts

1.4. Related Work

1.4.1. Multiple-image aggregation

1.4.2. Hand-crafted Priors Based Methods

- maximize the contrast

- dark channel

- color-lines

- non-local

- fusion of luminance, chromatic, and saliency maps

1.4.3. Data-driven Methods

- combine four haze-relevant features with a Random Forest

- color attenuation prior

- deep learning

1.5. Dataset

A ∈ (0.8, 1.0), β ∈ [0.5, 1.5]. Randomly sample 7 groups of parameters per image and add 1% Gaussian noise.

- Train: 1400 images × 7

- Test: the remaining 49 images × 7

- RESIDE dataset: benchmark
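The synthesis procedure described above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' data pipeline: the function names are hypothetical, a per-pixel depth map is assumed to be available, and "1% Gaussian noise" is interpreted as additive noise with standard deviation 0.01 on images scaled to [0, 1].

```python
import numpy as np

def synthesize_haze(clear, depth, airlight, beta, noise_std=0.01, rng=None):
    """Render a hazy image from a clear image (H x W x 3) and depth map (H x W)."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.exp(-beta * depth)[..., None]           # transmission, H x W x 1
    hazy = clear * t + airlight * (1.0 - t)        # atmospheric scattering model
    hazy = hazy + rng.normal(0.0, noise_std, size=hazy.shape)  # assumed noise model
    return np.clip(hazy, 0.0, 1.0)

def sample_haze_params(rng, n_groups=7):
    """Draw n_groups (A, beta) pairs from the ranges given in the notes."""
    airlights = rng.uniform(0.8, 1.0, size=n_groups)
    betas = rng.uniform(0.5, 1.5, size=n_groups)
    return list(zip(airlights, betas))
```

Calling `synthesize_haze` once per sampled pair would turn each clear image into seven hazy variants, which matches the "× 7" factor in the split above.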

2. Network

2.1. White Balance Input

- recover the latent color of the scene and eliminate chromatic cast

- but still present low contrast

2.1.1. Gray World Assumption

The R, G, and B components of an image should average out to a common gray value.
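A basic white balance under the gray world assumption rescales each channel so that its mean matches the overall gray level. The sketch below is a simple textbook variant and may differ from the exact white balance technique used in the paper; the function name and the `eps` guard are assumptions.

```python
import numpy as np

def gray_world_white_balance(image, eps=1e-8):
    """Scale each channel of an H x W x 3 image in [0, 1] to a common mean."""
    channel_means = image.reshape(-1, 3).mean(axis=0)  # mean of R, G, B
    gray = channel_means.mean()                        # target gray level
    gains = gray / (channel_means + eps)               # per-channel gain
    return np.clip(image * gains, 0.0, 1.0)
```

An image with a warm cast (a high red mean) gets a red gain below 1, which pulls the channel means together.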

2.2. Contrast Enhanced Input

- subtracting the average luminance value

- dark image regions tend toward black
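The two points above can be illustrated with a short sketch that subtracts the mean luminance and rescales the result. The gain `mu = 2 * (0.5 + mean)` is an assumption recalled from fusion-based dehazing work and should be checked against the paper; the function name is hypothetical, and the mean is taken over all pixels and channels for simplicity.

```python
import numpy as np

def contrast_enhance(image):
    """Subtract the average luminance of an image in [0, 1], then apply a gain."""
    mean_lum = image.mean()            # average over the whole image
    mu = 2.0 * (0.5 + mean_lum)        # assumed gain factor
    # Pixels darker than the mean become negative and are clipped to black.
    return np.clip(mu * (image - mean_lum), 0.0, 1.0)
```

The clipping step is where the "tend toward black" behaviour shows up: every pixel below the mean luminance maps to 0.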

2.3. Gamma Corrected Input

- overcome the dark limitation of CE
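Gamma correction applies a power law to each pixel. A minimal sketch follows; the function name is hypothetical, and the defaults `alpha = 1` and `gamma = 2.5` are assumptions for illustration that should be verified against the paper.

```python
import numpy as np

def gamma_correct(image, alpha=1.0, gamma=2.5):
    """Apply alpha * image ** gamma to an image with values in [0, 1]."""
    return np.clip(alpha * np.power(image, gamma), 0.0, 1.0)
```

Unlike the contrast-enhanced input, this mapping is smooth and sends only exact zeros to black, so dark regions keep some detail.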

2.4. Network

- dilation Conv

- ReLU

- 3 Conv + 3 DeConv, stride 1

- first layer uses 5x5 kernels, other layers use 3x3 kernels with 32 channels
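To make the "dilation Conv" bullet concrete, here is a single-channel dilated convolution with stride 1 and zero padding in plain NumPy. It is a didactic sketch, not the network's layer: the function name is hypothetical, odd kernel sizes are assumed, and the dilation rates used in GFN are not recorded in these notes.

```python
import numpy as np

def dilated_conv2d(image, kernel, dilation=1):
    """Cross-correlate a 2-D image with a dilated kernel ('same' size, stride 1)."""
    kh, kw = kernel.shape
    pad_h = dilation * (kh - 1) // 2
    pad_w = dilation * (kw - 1) // 2
    padded = np.pad(image, ((pad_h, pad_h), (pad_w, pad_w)))  # zero padding
    out = np.zeros_like(image, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            # Kernel taps are spaced `dilation` pixels apart.
            dy, dx = i * dilation, j * dilation
            out += kernel[i, j] * padded[dy:dy + image.shape[0],
                                         dx:dx + image.shape[1]]
    return out
```

A k x k kernel with dilation d covers a window of d(k - 1) + 1 pixels per side, so a 3x3 kernel with dilation 2 sees a 5x5 area without any downsampling.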

2.5. Multi-Scale Refinement

- vary the image resolution (x2) to avoid halo artifacts

- loss function

- motivation

The human visual system is sensitive to local changes (e.g., edges) over a wide range of scales. The multi-scale approach provides a convenient way to incorporate local image details at varying resolutions.
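The loss formula itself is not reproduced in these notes. One plausible form, stated here as an assumption, is a mean squared error summed over scales, where $F$ is the network with parameters $\Theta$, $I_{i,k}$ and $J_{i,k}$ are the $i$-th hazy input and clear target at scale $k$, $N$ is the number of training pairs, and $K$ is the number of scales:

```latex
L_c(\Theta) = \frac{1}{N} \sum_{i=1}^{N} \sum_{k=1}^{K}
  \bigl\| F(I_{i,k}; \Theta) - J_{i,k} \bigr\|^2
```

All symbols here are introduced for illustration and should be checked against the paper.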

2.6. GAN Loss

Applied only to the output at the finest scale.

2.7. Total Loss
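These notes do not record the formula for the total loss. Given the two preceding sections, a natural reading, stated here as an assumption, is a weighted sum of the multi-scale content loss $L_c$ and the adversarial loss $L_{adv}$, with a weight $\lambda$ treated as a hyperparameter:

```latex
L_{total} = L_c + \lambda \, L_{adv}
```

The symbol names and the placement of $\lambda$ are illustrative; the exact weighting should be taken from the paper.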

3. Experiments

3.1. Details

- 128x128 patches, batch size 10, 240,000 iterations

- Adam, learning rate 0.0001 with decay

- weight decay 0.00001

- trained for 35 hours on a K80

3.2. Comparison

Compared under light, medium, and heavy haze (β = 0.8, 1.0, 1.2).

3.3. Ablation Study

Multi-scale

Gated Fusion

3.4. Limitation

- cannot handle images with very heavy fog